Introduction

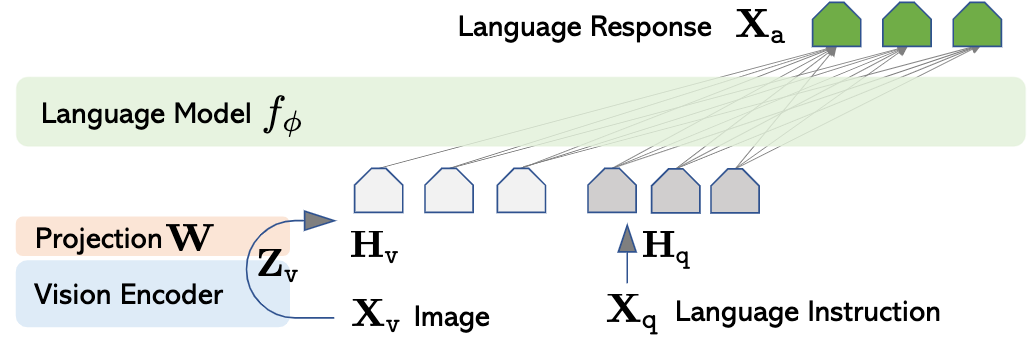

A multimodal large language model (MLLM) usually consists of three parts: an encoder $E$ that ingests information from different modalities, a large language model (LLM) that is responsible for completing various downstream tasks given multimodal input such as images and text, and an adaption layer $C$ that aligns features of different modalities to the word embedding space of the LLM. An example of an MLLM that adopts this architecture is LLaVA [1].

Many efforts have been made to improve the performance of MLLMs. In this post, we review the design of the adaption layer and its potential effect on downstream tasks.

Method

Suppose the hidden size of the LLM is $d$ and the feature produced by encoder $E$ is $V\in\mathbb{R}^{P\times d_v}$, where $P$ is the number of features (the number of visual patches if $E$ is a visual encoder) and $d_v$ is the channel dimension. The adaption layer $C$ then aligns $V$ with the word embedding space via $x=C(V)\in\mathbb{R}^{Q\times d}$, where $Q$ is the number of tokens passed to the LLM. In other words, $C$ is a mapping from $\mathbb{R}^{P\times d_v}$ to $\mathbb{R}^{Q\times d}$.

Based on the relationship between $P$ and $Q$, we can divide adaption layers into two types:

- Feature-preserving adaption layer, where $P=Q$

- Feature-compressing adaption layer, where $P>Q$.

Feature-preserving adaption layer

The simplest feature-preserving adaption layer is a linear projection, as used in LLaVA [1]:

$$ x = VW^T, \text{ where } W\in\mathbb{R}^{d\times d_v}. $$

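A minimal NumPy sketch of this projection follows; the toy sizes are arbitrary and chosen only for illustration. In PyTorch the same map is `nn.Linear(d_v, d, bias=False)`, whose weight has shape `(d, d_v)` like $W$ here (implementations usually keep the default bias term as well).

```python
import numpy as np

def linear_adapter(V: np.ndarray, W: np.ndarray) -> np.ndarray:
    """Feature-preserving linear adaption layer, x = V W^T.

    V: (P, d_v) encoder features; W: (d, d_v) projection weight.
    Returns x: (P, d), i.e. one LLM token per input feature (Q = P).
    """
    assert V.shape[1] == W.shape[1], "channel dimension d_v must match"
    return V @ W.T

# Hypothetical toy sizes, chosen only for illustration.
P, d_v, d = 16, 8, 32
rng = np.random.default_rng(0)
V = rng.standard_normal((P, d_v))
W = rng.standard_normal((d, d_v))
x = linear_adapter(V, W)
print(x.shape)  # (16, 32)
```

The number of tokens is unchanged; only the channel dimension moves from $d_v$ to $d$.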

A more expressive alternative is a two-layer MLP:

$$ x = \phi(VW_1^T)W_2^T, $$

where $W_1\in\mathbb{R}^{d\times d_v}$, $W_2\in\mathbb{R}^{d\times d}$, and $\phi$ is an activation function, implemented as `nn.GELU()`.

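The sketch below writes the two-layer MLP in NumPy, using the exact (erf-based) GELU that `nn.GELU()` computes by default. Biases are omitted to match the equation; a PyTorch version would typically be `nn.Sequential(nn.Linear(d_v, d), nn.GELU(), nn.Linear(d, d))`, which does include biases. Sizes are hypothetical.

```python
import math
import numpy as np

# Elementwise error function; NumPy has no built-in erf, so vectorize math.erf.
_erf = np.vectorize(math.erf)

def gelu(z: np.ndarray) -> np.ndarray:
    """Exact (erf-based) GELU, the default form of PyTorch's nn.GELU()."""
    return 0.5 * z * (1.0 + _erf(z / math.sqrt(2.0)))

def mlp_adapter(V, W1, W2):
    """Two-layer MLP adaption layer, x = phi(V W1^T) W2^T (no biases).

    V: (P, d_v); W1: (d, d_v); W2: (d, d). Returns x: (P, d).
    """
    h = gelu(V @ W1.T)   # hidden activations, (P, d)
    return h @ W2.T      # output tokens, (P, d)

P, d_v, d = 16, 8, 32    # hypothetical toy sizes
rng = np.random.default_rng(0)
V = rng.standard_normal((P, d_v))
W1 = rng.standard_normal((d, d_v))
W2 = rng.standard_normal((d, d))
x = mlp_adapter(V, W1, W2)
print(x.shape)  # (16, 32)
```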

Feature-compressing adaption layer

Feature-compressing adaption layers can be categorized into three types:

- average pooling

- attention pooling

- convolution mapping

They usually comprise two steps:

- reduce the number of features from $P$ to $Q$ with a pooling operation $\mathcal{P}$: $$ f' = \mathcal{P}(V)\in\mathbb{R}^{Q\times d_v} $$

- project the compressed features $f'$ to the word embedding space with a transformation $\mathcal{T}$: $$ x = \mathcal{T}(f')\in\mathbb{R}^{Q\times d} $$
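For average pooling, the two steps can be sketched as follows. This assumes the $P$ patch features lie on a square grid in row-major order and are averaged over non-overlapping windows, and it uses a linear projection as $\mathcal{T}$; the grid layout, window size, and projection are illustrative assumptions, not a specific model's configuration.

```python
import numpy as np

def avg_pool_2d(V: np.ndarray, grid: int, stride: int) -> np.ndarray:
    """Pooling step P: average non-overlapping stride x stride windows.

    V: (P, d_v), patch features in row-major order on a grid x grid map.
    Returns f': (Q, d_v) with Q = (grid // stride) ** 2.
    """
    P, d_v = V.shape
    assert P == grid * grid and grid % stride == 0
    g = grid // stride
    # Split each spatial axis into (window index, offset inside window).
    blocks = V.reshape(g, stride, g, stride, d_v)
    return blocks.mean(axis=(1, 3)).reshape(g * g, d_v)

def project(f_prime: np.ndarray, W: np.ndarray) -> np.ndarray:
    """Transformation step T (assumed linear): W has shape (d, d_v)."""
    return f_prime @ W.T

# Toy example: a 4x4 grid of scalar features (d_v = 1) numbered 0..15.
V = np.arange(16, dtype=float).reshape(16, 1)
f_prime = avg_pool_2d(V, grid=4, stride=2)     # P = 16 -> Q = 4
print(f_prime.ravel().tolist())  # [2.5, 4.5, 10.5, 12.5]
W = np.full((3, 1), 2.0)                       # hypothetical projection to d = 3
x = project(f_prime, W)
print(x.shape)  # (4, 3)
```

Each output feature is the mean of one 2x2 window (for example, patches 0, 1, 4, 5 give 2.5), so the token count drops by a factor of `stride**2`.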

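For attention pooling, the pooling operation $\mathcal{P}$ is a cross-attention from a learnable query to the encoder features:

$$ f' = \mathrm{softmax}\left(\frac{\mathbf{Q}(VW_k^T)^T}{\sqrt{d_c}}\right)VW_v^T\in\mathbb{R}^{Q\times d_c}, $$

where $W_k, W_v\in\mathbb{R}^{d_c\times d_v}$ and $\mathbf{Q}\in\mathbb{R}^{Q\times d_c}$ is a learnable query (in bold to distinguish it from the token count $Q$). Here the compressed features have channel dimension $d_c$, so $\mathcal{T}$ maps from $d_c$ rather than $d_v$.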

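A minimal single-head sketch of attention pooling with learnable queries is shown below. It is a simplification under stated assumptions: real resamplers typically use multiple heads, several layers, normalization, and an output projection, none of which are modeled here, and the toy sizes are hypothetical.

```python
import numpy as np

def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attention_pool(V, queries, W_k, W_v):
    """Single-head cross-attention pooling with learnable queries.

    V: (P, d_v) encoder features; queries: (Q, d_c) learnable query;
    W_k, W_v: (d_c, d_v). Returns f': (Q, d_c).
    """
    K = V @ W_k.T                                  # keys, (P, d_c)
    Vals = V @ W_v.T                               # values, (P, d_c)
    d_c = queries.shape[1]
    A = softmax(queries @ K.T / np.sqrt(d_c))      # (Q, P); each row sums to 1
    return A @ Vals                                # (Q, d_c)

P, Q, d_v, d_c = 16, 4, 8, 6   # hypothetical toy sizes; Q < P compresses
rng = np.random.default_rng(0)
V = rng.standard_normal((P, d_v))
queries = rng.standard_normal((Q, d_c))
W_k = rng.standard_normal((d_c, d_v))
W_v = rng.standard_normal((d_c, d_v))
f_prime = attention_pool(V, queries, W_k, W_v)
print(f_prime.shape)  # (4, 6)
```

Because each row of the attention matrix sums to 1, every compressed feature is a convex combination of the $P$ value vectors, and $Q$ is set by the number of learnable queries rather than by $P$.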

For convolution mapping, $W=[w_1,\dots,w_n]^T\in\mathbb{R}^n$ and $W'=[w'_1,\dots,w'_{2K}]^T\in\mathbb{R}^{2K}$ are the weights of the convolution layers.
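As a hypothetical simplification, the pooling step of a convolution mapping can be illustrated with a single strided 1-D convolution along the feature sequence, whose kernel plays the role of $W\in\mathbb{R}^n$ (kernel size and stride both equal to $n$, one kernel shared by all channels). The second weight $W'$ is not modeled, and a projection $\mathcal{T}$ would follow as in the other variants.

```python
import numpy as np

def conv_pool_1d(V: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Hypothetical strided 1-D convolution (kernel size = stride = n).

    V: (P, d_v) with P divisible by n; w: (n,) kernel shared by all channels.
    Returns f': (P // n, d_v). As in deep-learning libraries, the kernel is
    applied without flipping (i.e. cross-correlation).
    """
    n = w.shape[0]
    P, d_v = V.shape
    assert P % n == 0, "P must be divisible by the kernel size"
    windows = V.reshape(P // n, n, d_v)        # non-overlapping windows of n features
    return np.einsum("qnc,n->qc", windows, w)  # weighted sum inside each window

V = np.arange(8, dtype=float).reshape(8, 1)   # P = 8 scalar features
print(conv_pool_1d(V, np.array([0.5, 0.5])).ravel().tolist())  # [0.5, 2.5, 4.5, 6.5]
```

With uniform weights $w_i = 1/n$ this reduces to average pooling; learning $w$ lets the layer weight positions inside each window differently.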

D-Abstractor

MEQ-Former

LDPv2

VSS